Component 05

Monitoring and Adjustment

Implementation Steps

5.1 System Level

The system level monitoring and adjustment subcomponent focuses on the linkage between resource allocation decisions and the achievement of strategic goals and objectives. A well-defined monitoring process helps agencies diagnose the factors that affect outcomes, such as available funding and external economic, environmental, and social trends. Refining agency monitoring processes, collecting additional data, and improving analysis capabilities provide new insights into causal factors contributing to performance. A key characteristic of this subcomponent is the application of performance monitoring information to identify where adjustments need to be made. These insights can be used in future planning and programming decisions. System level monitoring typically has a wider scope and a long-range time horizon, because the relationship between actions and results can, in some instances, take years to assess. The following section outlines steps agencies can follow to establish system level monitoring and adjustment processes.

- Determine monitoring framework

- Regularly assess monitoring results

- Use monitoring information to make adjustments

- Establish an ongoing feedback loop to targets, measures, goals, and future planning and programming decisions

- Document the process

Step 5.1.1 System Level: Determine monitoring framework

The first step toward establishing a monitoring framework is to define what metrics are to be tracked, the frequency, and data sources. In addition, it is important to identify who needs to see the monitoring information—for what purpose and in what form? Monitoring efforts should take place regularly, with data collection and management ongoing, as discussed further in Component C: Data Management and Component D: Data Usability and Analysis. Developing a strategy for efficient monitoring and adjustment involves balancing the need for frequent information updates within the constraints of resource efficiency. Setting monitoring frequency should be done such that information is produced often enough to capture change. It should not be done so frequently that it creates extra unnecessary work, and not so infrequently that it misses early warning signs. Striking the right reporting frequency balance will take agencies time to figure out and will vary based on what is being monitored. Having the ability to vary monitoring frequency greatly enhances an agency’s capacity not only to respond to internal and external requests, but also to identify necessary planning and programming adjustments.

System level monitoring typically runs on a long-range timeframe, with review intervals ranging from monthly to multi-year. This is because gaining an understanding of the linkage between resource allocation decisions and system performance results can take several years.

Items to keep in mind as the monitoring framework is being developed:

- Include at a minimum the performance measures used to assess progress toward strategic agency goals (Component 01). All elements of a transportation performance management approach need to connect back to the agency’s strategic direction and performance targets.

- Coordinate with other agency business. There will be opportunities to combine efforts with annual reports, plan updates, and other ongoing business processes. Efficiencies can be achieved by aligning with legislative or budgetary milestones.

- Expand monitoring capabilities through data partnerships. The sharing of data internally across agency departments and with external partners can greatly enhance an agency’s monitoring and adjustment capabilities.

- Identify data gaps. Once the monitoring metrics have been determined, assess the suitability of the available data and identify existing gaps (see Component D: Data Usability and Analysis). As the monitoring process matures, data needs will likely expand to improve the understanding of the causes behind progress or lack thereof.

- Clarify how monitoring needs vary by user. Identifying the range of monitoring-information users (e.g., performance analyst versus senior agency manager) will help determine the monitoring framework (see Component C: Data Management).

- Establish close ties to reporting and communications efforts (see Component 06: Reporting and Communication).
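The framework elements described in this step (what is tracked, how often, from which data source, and for whom) can be captured in a simple structured record. The following is a minimal sketch in Python, not any agency's actual system; the class name `MonitoredMeasure`, its fields, the frequency table, and the sample entries are all hypothetical illustrations.

```python
from dataclasses import dataclass

# Hypothetical review intervals, in months, for each monitoring frequency.
FREQUENCY_MONTHS = {"monthly": 1, "quarterly": 3, "annual": 12}


@dataclass
class MonitoredMeasure:
    """One entry in a monitoring framework (all field names are illustrative)."""
    name: str          # performance measure being tracked
    goal: str          # strategic goal the measure links back to
    frequency: str     # key into FREQUENCY_MONTHS
    data_source: str   # where the data comes from
    audience: str      # who needs to see the results


def reviews_per_year(measure: MonitoredMeasure) -> int:
    """Number of scheduled reviews in a 12-month period."""
    return 12 // FREQUENCY_MONTHS[measure.frequency]


# Illustrative entries only; not drawn from any agency's framework.
framework = [
    MonitoredMeasure("Pavement condition", "System preservation",
                     "annual", "pavement management system", "senior managers"),
    MonitoredMeasure("Maintenance requests closed", "System preservation",
                     "monthly", "maintenance work-order log", "performance analysts"),
]

for m in framework:
    print(f"{m.name}: {reviews_per_year(m)} review(s) per year for {m.audience}")
```

Recording the goal, audience, and frequency alongside each measure keeps the link back to strategic direction explicit and makes it easy to see where reporting cycles could be combined.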

Figure 5-4: Strategic Monitoring

Source: Federal Highway Administration

Examples

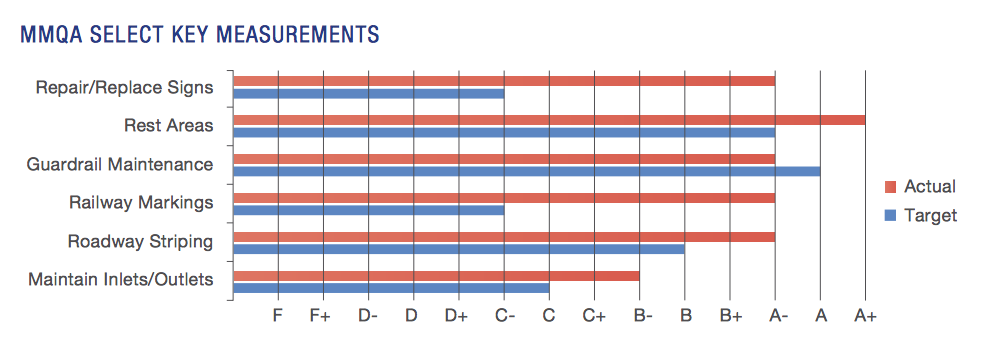

Within Utah DOT’s long-range transportation plan (LRTP), the agency assesses the attainment of each strategic goal. For example, under the goal of system preservation, the areas of pavement condition, bridge condition, and maintenance each have their own targets toward which plans and programs are strategized. UDOT has structured its monitoring framework such that an annual update, Strategic Direction 2015, requires monitoring checkpoints on performance measures and targets developed in the four-year LRTP.1 Below, the Maintenance Division at UDOT reports its targets as well as yearly progress toward them (Figure 5-5).

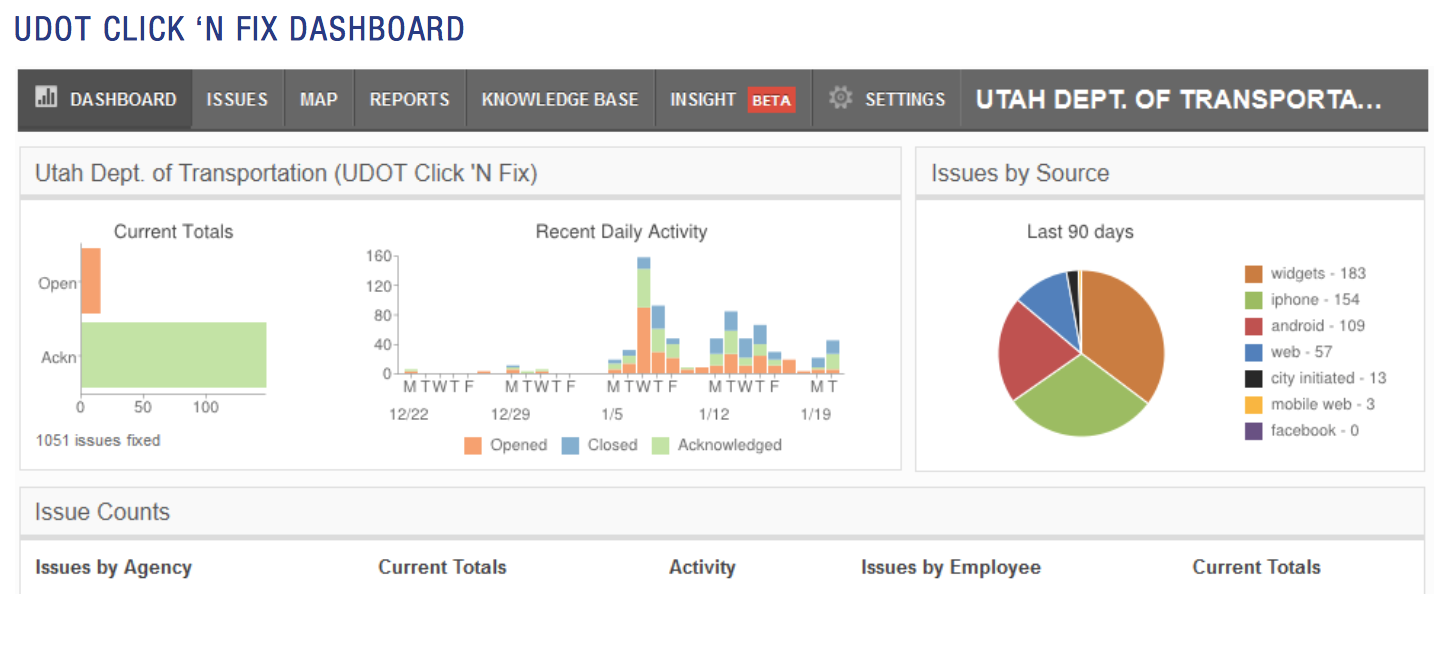

Figure 5-6 shows a view of UDOT’s Click ‘n Fix Dashboard, which staff use to track daily maintenance requests. Staff can see the number of reported issues on a day-to-day basis, and the interface also allows monitoring via maps and reports of completed and incomplete requests. The key here is the linkage back to the agency’s strategic goals and performance targets.

UDOT integrates annual monitoring efforts into its LRTP process in order to assess progress on a systemic level, and then also monitors on a programmatic level to assess progress toward performance targets within specific program areas, such as system preservation. The monitoring framework is set up so that there are yearly updates within performance areas, as well as the ability to check in still more frequently via a project-tracking dashboard.

Figure 5-5: MMQA Select Key Measurements: Projects Completed v. Targets

Source: Strategic Direction 20152

Figure 5-6: UDOT Click ‘n Fix Dashboard

Source: Strategic Direction 20153

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component 04: Performance-Based Programming

- Component 06: Reporting and Communication

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.1.2 System Level: Regularly assess monitoring results

This step entails instituting a well-defined performance-monitoring process to understand past and current performance. At a minimum, an agency should review the performance trends for each measure developed under the Strategic Direction (Component 01). During this step, it is important to return to the internal and external factors at play that may have an impact on progress toward a goal. Factors might include ongoing public input, a shift in priorities, or a change in any of the many external or internal factors that might potentially impact the agency’s work (see Table 5.4 below). If ongoing monitoring reveals that an agency is falling short of a performance target, this might indicate that the target was not realistic, the strategies were not effective, or one factor or a combination of factors threw performance results off course. In this step, conduct performance diagnostics to understand system performance trends.

Source: Federal Highway Administration

| Internal | External |
|---|---|
| Funding | Economy |
| Staffing constraints | Weather |
| Data availability and quality | Politics/legislative requirements |
| Leadership | Population growth |
| Capital project commitments | Demographic shifts |
| Planned operational activities | Vehicle characteristics |
| Cultural barriers | Zones of disadvantaged populations |
| Agency priorities | Modal shares |
| Agency jurisdiction | Gas prices |
| Senior management directives | Land use characteristics |
| Policy directives (e.g., zero fatalities) | Driver behavior |
| Cross performance area tradeoffs | Traffic |

Below is a set of questions that can be used to start the performance diagnosis. While the specific questions will depend on the specific performance area, the following types of questions will generally be applicable:

- What is the current level of performance?

    - How does it vary across different types of related measures (e.g., pavement roughness, rutting, and cracking)?

    - How does it vary across different transportation system subsets (e.g., based on district, jurisdiction, functional class, ownership, corridor, etc.)?

    - How does it vary by class of traveler (e.g., mode, vehicle type, trip type, age category, etc.)?

    - How does it vary by season, time of day, or day of the week?

    - Is observed performance representative of “typical” conditions or is it related to unusual events or circumstances (e.g., storm events or holidays)?

- How does our performance compare to others?

    - How does it compare to the national average?

    - How does it compare to peer agencies?

- How does the current level of performance compare to past trends?

    - Are things stable, improving, or getting worse?

    - Is the current performance part of a regularly occurring cycle?

- What factors have contributed to the current performance?

    - What factors can we influence (e.g., hazardous curves, bottlenecks, pavement mix types, etc.)?

    - How do changes in performance relate to general socio-economic or travel trends (e.g., economic downturn, aging population, lower fuel prices contributing to increase in driving)?

    - How effective have our past actions to improve performance been (e.g., safety improvements, asset preventive maintenance programs, incident response improvement, etc.)?
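Two of the diagnostic questions above, whether performance is stable, improving, or getting worse, and how it varies across system subsets, lend themselves to a short calculation. The sketch below is one simple way to approach them and is not a method prescribed by this guidebook. It assumes a half-versus-half comparison of means with an arbitrary 1% tolerance; the function names, the tolerance, and the sample values are hypothetical.

```python
from statistics import mean


def trend_direction(values, higher_is_better=True, tolerance=0.01):
    """Classify a series as 'improving', 'stable', or 'worsening'.

    Compares the mean of the later half of the series with the mean of the
    earlier half; `tolerance` is the relative change treated as no change.
    """
    if len(values) < 4:
        raise ValueError("need at least four observations to compare halves")
    half = len(values) // 2
    early, late = mean(values[:half]), mean(values[-half:])
    if early == 0:
        raise ValueError("relative change is undefined when the early mean is zero")
    change = (late - early) / abs(early)
    if abs(change) <= tolerance:
        return "stable"
    improved = change > 0 if higher_is_better else change < 0
    return "improving" if improved else "worsening"


def by_subset(records, key):
    """Average a measure within each subset (e.g., district or functional class)."""
    groups = {}
    for rec in records:
        groups.setdefault(rec[key], []).append(rec["value"])
    return {name: mean(vals) for name, vals in groups.items()}


# Hypothetical annual "percent in good condition" values.
pct_good = [82.0, 81.5, 80.0, 78.5, 77.0, 76.0]
print(trend_direction(pct_good))

# Hypothetical segment-level records broken down by district.
records = [
    {"district": "A", "value": 80.0},
    {"district": "A", "value": 70.0},
    {"district": "B", "value": 90.0},
]
print(by_subset(records, "district"))
```

A comparison this coarse cannot separate a regular cycle from a real shift, so it is best treated as a prompt for the remaining questions rather than as a conclusion.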

Examples

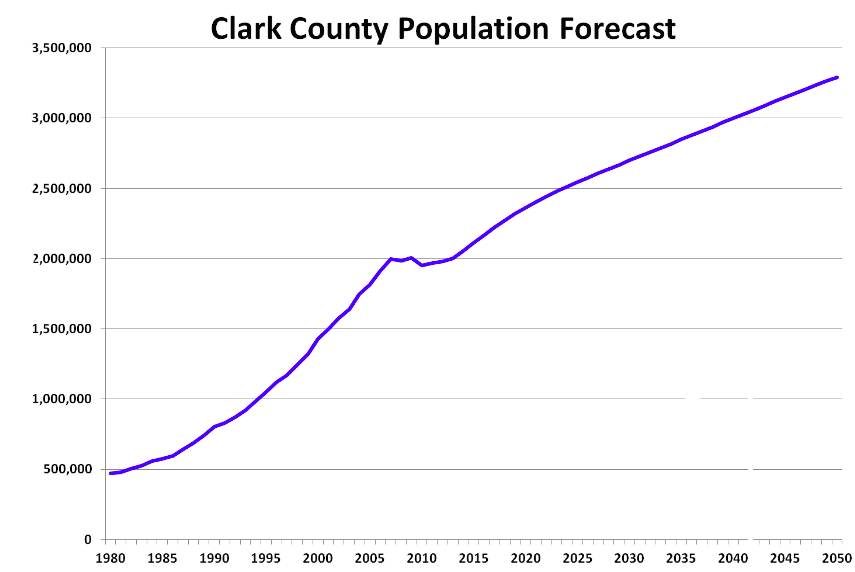

The Regional Transportation Commission (RTC) is the metropolitan planning organization (MPO) for Southern Nevada, including the Las Vegas Valley, and is tasked with identifying programs and projects to improve air quality, provide mobility options, and enhance transportation efficiency and safety. In monitoring how effective RTC strategies are in making progress toward the region’s nine goals, a key external factor RTC must consider is that Southern Nevada continues to grow rapidly in terms of economy and population. This increases demands on the transportation system as a whole, while also compounding the complexities of funding it. While the recession impacted funding levels, it only slowed rather than stopped area growth, leading to an increased mismatch between available transportation financing and system needs.4 Because of the potential impacts of these external factors, RTC has used a model, developed by the University of Nevada Las Vegas’s Center for Business and Economic Research, to estimate regional economic and population growth.

RTC coordinated the use of this model by local jurisdictions in the region, so that RTC can better predict travel demand, congestion increases, and air quality impacts5 and hence better understand the outcomes of their strategies and how the system is serving customers. By monitoring the demands on the system as well as its outcomes, RTC is better able to assess the financial needs for meeting those demands. As a result of the uncertainties caused by the rate of growth in the area and accompanying financial model complexity, RTC includes many “unfunded needs” projects in its program to reflect and track unmet needs over the course of the plan period. RTC recognizes how important external influences are in understanding the region’s ability to make progress toward its goals and objectives.

Figure 5-7: Clark County Population Growth Projection through 2050

Source: Southern Nevada Business Development Information: Population6

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.1.3 System Level: Use monitoring information to make adjustments

With a better understanding of past and current performance, agencies can isolate what causal factors they can influence and act on these new insights.

Items to keep in mind as monitoring information is used to consider adjustments:

- Passage of time. Has enough time passed to gain a true picture of progress? The trajectory of progress is not always a straight-line movement; more data points may be necessary to fully understand the trend. Often, momentum can build or can be impacted by external factors over the measurement timeframe.

- Constraints. Agencies may be hindered from making program and project adjustments by TIP and RTP amendment cycles, budget development timelines, and legislative requirements (e.g., delivery of conformity model runs).

- Anomalies. Consider whether there were special circumstances driving the performance results. A single event or factor can have a sizable impact, so something atypical occurring, such as a natural disaster or unexpected funding change, can lead to erroneous conclusions if not adequately understood.

- Reliability of predicted performance improvements from adjustment. Before implementing any adjustments, agencies should analyze future performance. In general, predictive capabilities should allow agencies to compare the “do nothing” scenario versus the potential impacts of adjustment (see Component D: Data Usability and Analysis).

- “Sub-measures” that provide new insights into causal factors contributing to performance. A sub-measure is a detailed quantifiable indicator uncovered during monitoring that provides additional insights into internal and external processes (e.g., preventive maintenance compliance—a driver of overall asset performance).

After these considerations, determine whether course correction is necessary. A communications strategy should be in place to ensure that stakeholders are informed and up to date on monitoring results and their consequences. If changes are made, be sure that any new measures, goals, or targets are calibrated to the preceding ones to ensure continuity and comprehensible documentation.

Examples

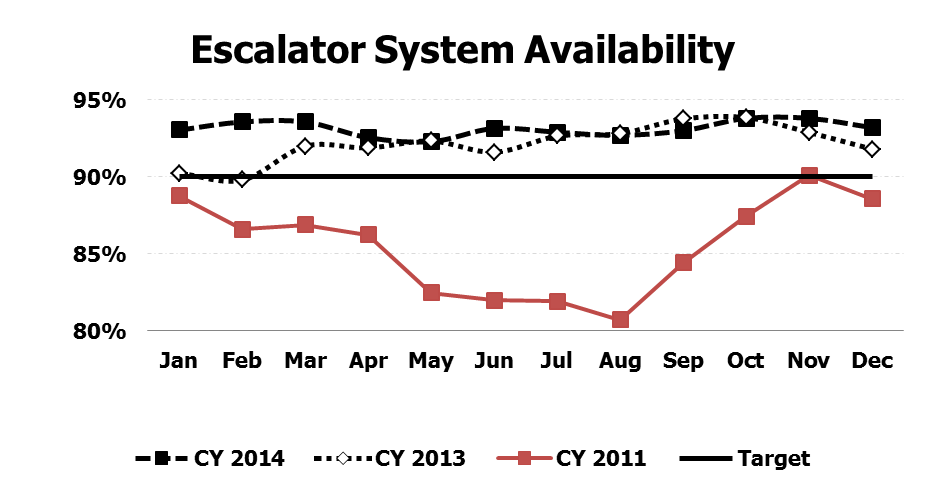

At the Washington Metropolitan Area Transit Authority (WMATA), escalator availability is a top priority of the agency’s customers. In 2011, the agency was suffering from very low escalator availability (Figure 5-8):

Figure 5-8: Escalator System Availability

Source: Adapted from Vital Signs Report: 2014 Annual Results7

Agency staff conducted a range of performance diagnostics to uncover the root cause of the poor performance results. The analysis revealed a preventive maintenance compliance rate of 44%. This new sub-measure was quickly added to regular tracking and discussed during executive management meetings. WMATA put increased emphasis on preventive maintenance, conducting more proactive inspections to identify issues before problems occurred, concentrating on mechanic training, expanding quality control inspections before escalators were returned to service, and realigning maintenance staff into geographic regions designed to improve response times. The result was a notable increase in preventive maintenance compliance and improved escalator availability.
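A sub-measure such as preventive maintenance compliance is typically a simple ratio. The sketch below assumes compliance is defined as the share of scheduled preventive maintenance tasks completed on time; the source does not give WMATA's exact definition, and the function name and the counts are hypothetical, chosen only to reproduce a 44% rate.

```python
def compliance_rate(completed_on_time: int, scheduled: int) -> float:
    """Percent of scheduled preventive maintenance tasks completed on time."""
    if scheduled <= 0:
        raise ValueError("scheduled must be positive")
    if not 0 <= completed_on_time <= scheduled:
        raise ValueError("completed_on_time must be between 0 and scheduled")
    return 100.0 * completed_on_time / scheduled


# Hypothetical counts, not WMATA data.
print(f"{compliance_rate(220, 500):.0f}%")
```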

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component A: Organization and Culture

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.1.4 System Level: Establish an ongoing feedback loop to targets, measures, goals, and future planning and programming decisions

This step creates the critical feedback loop between performance results and future planning, programming and target setting decisions. To create an effective feedback loop, the monitoring information and the effect of adjustments need to be integrated into future strategic direction development (Component 01) and the setting of performance targets (Component 02). Through an increased understanding of the linkage between resource allocation decisions and results, the monitoring and adjustment component uncovers information to be used in future planning (Component 03) and programming (Component 04) decisions. This component also helps agency staff link their day-to-day activities to results and ultimately agency goals (Component A: Organization and Culture). The external and internal reporting and communication products (Component 06) need to be based on the information gathered during monitoring and adjustment.

Figure 5-9: Feedback Loop

Source: Federal Highway Administration

Examples

On February 9, 2014, a serious snow-related congestion event on Colorado’s Interstate 70 (I-70) turned a two-hour drive into an eight- to 10-hour journey.8 This event became a catalyst for the Colorado Department of Transportation (CDOT) to reexamine its maintenance and operations practices on this busy corridor. CDOT also engaged in extensive monitoring of the corridor’s mobility and safety results.

Because of this, the agency determined that the current level of performance on the corridor was not acceptable and made the following adjustments:

- Infrastructure. Colorado DOT widened the east and westbound Twin Tunnels, the first improvements along the corridor in 40 years.

- Operations. Colorado DOT invested $8 million to implement strategies such as additional plow drivers, snowplow escorts on the Eisenhower Tunnel approach, and ramp traffic metering at key locations.

- Public Education. Colorado DOT launched a public education campaign, Change Your Peak Drive, and worked with partners and other stakeholders to educate the public on driver behavior issues such as having good tires, driving safely around plows, traveling during off-peak times, carpooling, and finding information such as broadcast radio updates.9

The Division of Highway Maintenance was also given an elevated leadership role in coordinating capital and annual maintenance. It received additional staff support to accomplish this, with Directors of Operations assigned to each corridor, and maintenance crews and equipment pledged from other areas of the state for the winter. Additionally, in order to make the improvements real to the public, assist in monitoring efforts, and measure outcomes of this shift, Maintenance and Operations leadership began developing milestones and metrics around new objectives related to improved mobility on I-70 and I-25. This was assisted by departmental efforts to improve data gathering and provide more accurate time measurements for closures, delays, and causes of delay.10

Aligned with this systemic shift, the improvements to I-70 are specifically called out in the January 9, 2015 Action Plan for implementation and are further discussed below.11 In addition, a key mobility goal within the Strategic Actions developed for the Statewide Plan specifically calls for the development of Regional Operations Implementation Plans, Corridor Operations Plans, and tools to focus resources and solve issues at the regional and corridor levels.12

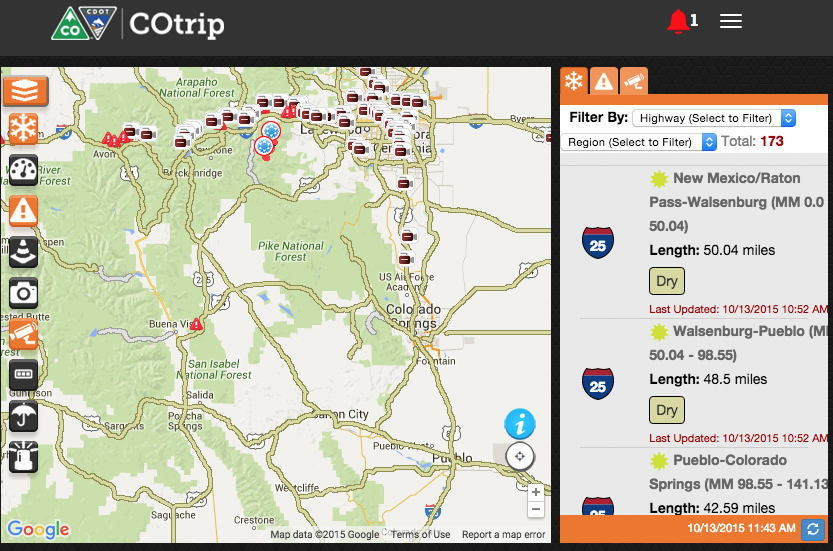

In June 2015, Colorado DOT revealed the performance improvements that had occurred as a result of these efforts over the course of Winter 2015, demonstrated by before-and-after mobility and safety measurements on I-70. The agency found that injuries and fatal crashes were reduced by 35%, and weather-related crashes were reduced by 46%. Unplanned closure time decreased by 16%; the number of hours of eastbound delay greater than 75 minutes decreased by 26%.13 Further efforts will continue to be developed, such as training for corridor first responders, defining performance measures for traffic incident clearance, and establishing a schedule of routine incident debriefings and performance assessments.14 COtrip, an online interface offering live camera monitoring, incident monitoring, and real-time road conditions, was launched to assist in communicating conditions to users as well as aid monitoring efforts.
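Before-and-after comparisons of this kind reduce to a percent-reduction calculation. The following is a minimal sketch of that arithmetic; the function name and the input counts are hypothetical and are not Colorado DOT's underlying data, which the source does not provide.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percent reduction from a 'before' value to an 'after' value.

    A negative result means the measure increased.
    """
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (before - after) / before


# Hypothetical crash counts for two comparable winter seasons.
print(percent_reduction(100, 65))
print(percent_reduction(200, 108))
```

For the comparison to be meaningful, the two periods should cover similar conditions, such as the same months and comparable traffic volumes and weather.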

Figure 5-10: COtrip User Interface

Source: Colorado Department of Transportation15

Colorado DOT’s actions on I-70 illustrate the steps taken to adjust targets, prioritize projects, and allocate resources after the serious weather and congestion event of February 2014 caused delays that degraded mobility performance to an unacceptable degree. These actions have been documented and incorporated into priorities for Colorado DOT’s upcoming update to its Statewide Transportation Plan. Moving forward, monitoring of performance on these corridors will reveal any change in outcomes due to this shift in operations and resources, or may reveal further opportunities for improvement.

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component 03: Performance-Based Planning

- Component 04: Performance-Based Programming

- Component 06: Reporting and Communication

- Component A: Organization and Culture

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.1.5 System Level: Document the process

Document the process, including progress, outputs, outcomes, and any strategic adjustments and the reasoning behind these. This includes documentation for the purposes of internal operations, ensuring that the monitoring and adjustment process is replicable in future iterations of plans and throughout multiple planning efforts. It also includes steps toward gathering and organizing data (see Component C: Data Management and Component D: Data Usability and Analysis) in order to ensure that external reporting (Component 06: Reporting and Communication) can be carried out in a sustainable and impactful way.

Examples

Several examples are offered here to illustrate how strategic level monitoring and adjustment processes and any subsequent changes to goals and targets are documented.

Program Delivery Monitoring at Southwestern Pennsylvania Commission

The Southwestern Pennsylvania Commission (SPC) offers a large amount of documentation regarding each individual program area’s monitoring and adjustment processes. As an example, within its congestion management program, SPC implements strategies under divisions of demand management, modal options, operational improvements, and capacity improvements. SPC documents all of the performance measurements and associated monitoring calculations directly on its website.16 Gathered here are all the associated studies, reports, and other tools SPC uses to highlight, analyze, and evaluate the effectiveness of various congestion management strategies implemented.17 As an example within this program, HOV lanes are listed as one strategy implemented to help reach congestion goals in the SPC region. SPC documents the reasoning behind the strategy and its relationship to the agency’s congestion targets. Before and after analysis is completed using results from monitoring traffic delay, and detailed information is included as to how calculations were reached and compared. This ensures that the same monitoring process can be reproduced indefinitely, allowing ongoing understanding of how investment in HOV lanes has enabled SPC to progress toward its congestion reduction target and its mobility goals.18

Program Delivery Monitoring at Missouri DOT

In the last decade, faced with increasing costs and decreasing revenue streams, the Missouri Department of Transportation (MoDOT) revisited its pavement management program. Based on financial constraints, the agency decided to focus its efforts on improving major highways, rather than spreading resources out over minor roads as well, as had been done according to a past formula. MoDOT established a target that would benefit the most users per dollar spent and relaxed its target for overall pavement condition that included minor roads. As a result of this adjustment, fewer resources were allocated to the preservation of minor roads, and the percentage of minor roads in good condition decreased from 71% to 60% from 2005 to 2009.19 At the same time, however, MoDOT was able to respond to customers’ desires for smoother roads by significantly improving the condition of major routes, from 47% in 2004 to 85% in 2007. Currently over 89% of major highways are in good condition, but MoDOT recognized that this condition level would be difficult to maintain without additional resources.20 MoDOT used its Tracker performance measurement tool to document this adjustment to its performance targets and measures, and to monitor and report the results, which are released quarterly.

Documenting the decision to focus more resources on major routes rather than on the system overall was key to MoDOT’s ability to measure progress moving forward and also to ensure stakeholders understood the adjustment. MoDOT measures its progress not only with typical performance measures, but also through regular customer satisfaction surveys and focus groups to determine whether improvement projects are making the anticipated progress toward a satisfactory user experience—therefore communicating this strategy back to users using monitoring data was critical.21 This documentation shows how the programs and projects implemented as MoDOT’s pavement strategies are intended to impact progress toward performance targets.

Linkages to Other TPM Components

5.2 Program/Project Level

The purpose of this subcomponent is to establish a process for tracking program and project outputs, and the effect of programs and projects on performance outcomes. This process provides early warning of potential inability to achieve performance targets. Insights are used to make project or program “mid-stream” adjustments and guide future programming decisions. The following section outlines steps agencies can follow to establish program/project level monitoring and adjustment processes. While the step names are identical, descriptions of monitoring activities within each step vary.

- Determine monitoring framework

- Regularly assess monitoring results

- Use monitoring information to make adjustments

- Establish an ongoing feedback loop to targets, measures, goals, and future planning and programming decisions

- Document the process

“A performance-based approach shifts the focus off of ‘can we deliver the project on budget’ to ‘are we doing the right set of projects.’ Monitoring and adjustment processes help us understand project results – information that is key to picking an effective set of projects year after year to maximize taxpayer investment into the system by focusing on projects that truly drive a better and safer outcome.”

Source: Greg Slater, MD State Highway Administration

Step 5.2.1 Program/Project Level: Determine monitoring framework

The first step toward establishing a monitoring framework is to define what metrics are to be tracked, the frequency, and data sources. In addition, it is important to identify who needs to see the monitoring information, for what purpose, and in what form. Monitoring efforts should take place regularly, with data collection and management ongoing, as discussed further in Component C: Data Management and Component D: Data Usability and Analysis. Developing a strategy for efficient monitoring and adjustment involves balancing the need for frequent information updates within the constraints of resource efficiency. Monitoring frequency should produce information often enough to capture change, yet not so frequently that it creates extra unnecessary work, and not so infrequently that it misses early warning signs. Striking the right reporting frequency balance will take time to figure out and will vary based on what is being monitored. Having the ability to vary monitoring frequency will greatly enhance an agency’s capacity not only to respond to internal and external requests, but also to identify necessary planning and programming adjustments.

The typical program/project level monitoring ranges from ‘up-to-the-minute’ to a yearly basis. To assess the effectiveness of programs and projects, annual updates should occur at a minimum, with regular internal check-ins a must for understanding if projects are being delivered on time and within scope. However, gaining an understanding of the effect strategies are having on performance results may take longer.

Items to keep in mind as the monitoring framework is being developed:

- Link metrics used in monitoring to strategic direction. All elements of a transportation performance management approach need to connect back to the agency’s strategic direction and performance targets.

- Coordinate with other agency business. There will be opportunities to combine efforts with annual reports, plan updates, and other ongoing business processes. Efficiencies can be achieved by aligning with legislative or budgetary milestones.

- Expand monitoring capabilities through data partnerships. The sharing of data internally across agency departments and with external partners can greatly enhance an agency’s monitoring and adjustment capabilities.

- Identify data gaps. Once the monitoring metrics have been determined, assess the suitability of the available data and identify existing gaps (see Component D: Data Usability and Analysis). As the monitoring process matures, data needs will likely expand to improve the understanding of the causes behind progress or lack thereof.

- Clarify how monitoring needs vary by user. Identifying the range of monitoring information users (e.g., performance analyst versus senior agency manager) will help determine the monitoring framework (see Component C: Data Management).

Figure 5-11: Strategic Monitoring

Source: Federal Highway Administration

Examples

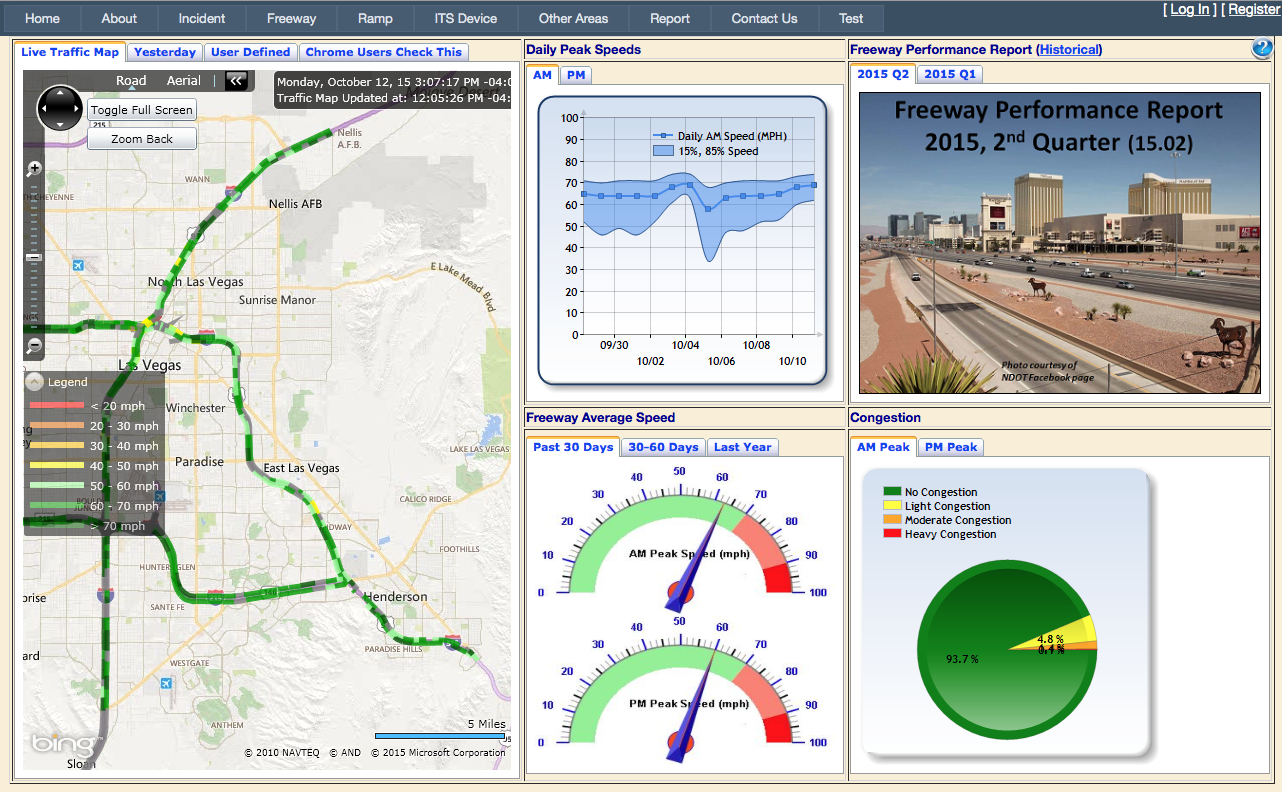

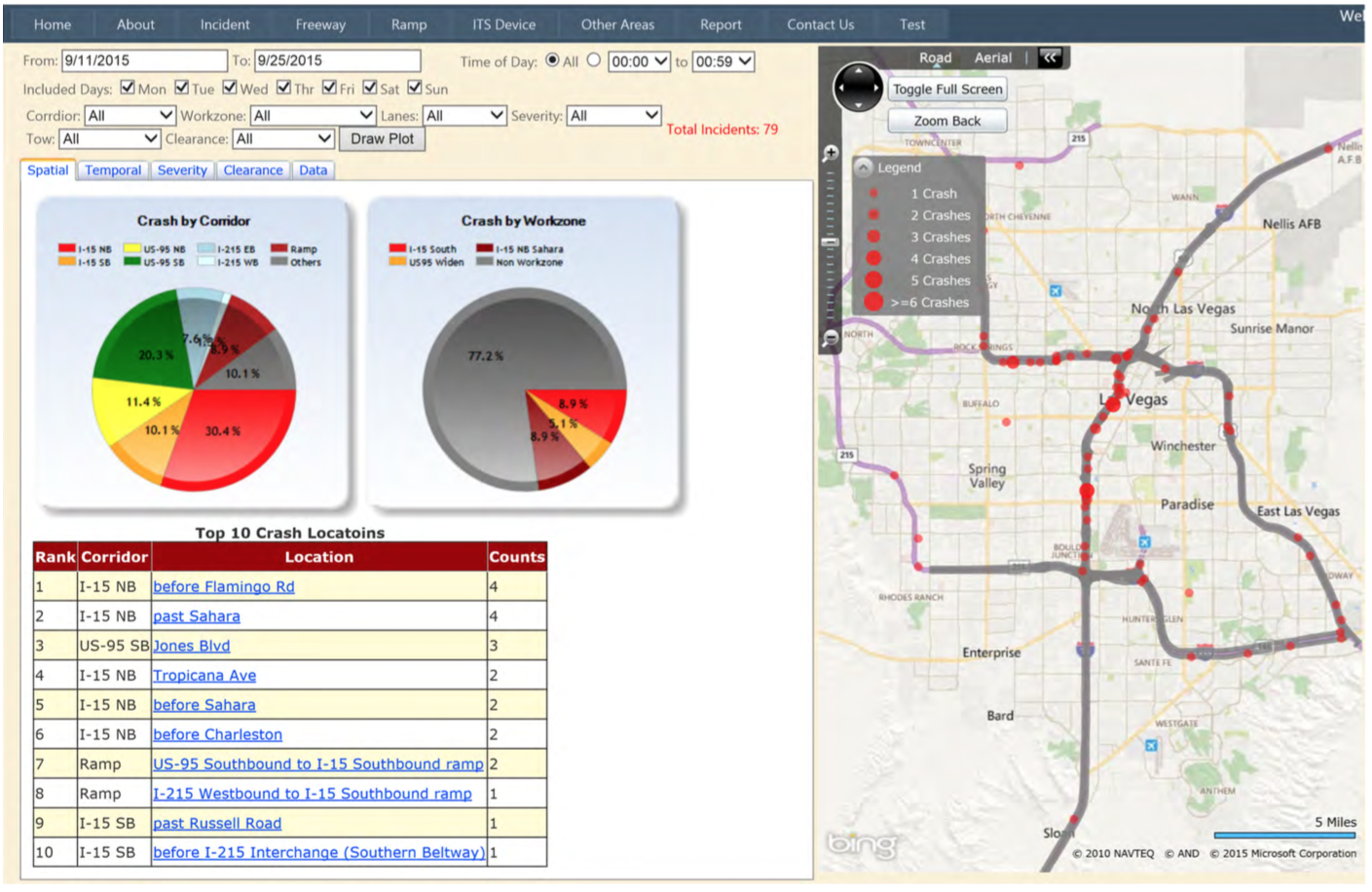

The FAST system (Freeway and Arterial System of Transportation) is a comprehensive monitoring effort that develops, implements, and maintains an Intelligent Transportation System (ITS) administered by the Regional Transportation Commission (RTC) in conjunction with the Nevada Department of Transportation (NDOT). Nevada’s ITS includes coordinated traffic monitoring cameras, signal timing, and a portfolio of projects such as ramp metering and informative signage aimed at reducing congestion and improving user experience along major corridors throughout the region. Using FAST to monitor Southern Nevada’s major corridors, RTC can devise mobility improvements without relying solely on system expansion, and can better prioritize the most impactful programs and projects based on performance measures.22 FAST helps RTC define and track progress toward meeting performance targets, which ultimately defines specific project needs and impacts such as maintenance, critical missing links and capacity needs.23

FAST is an award-winning real-time monitoring dashboard that enables detailed analysis on request.24 The dashboard displays feeds from cameras to track congestion along the corridors. This interface is monitored by RTC staff to develop quarterly reports on congestion events and understand historic patterns. The system archives thousands of screenshots of traffic camera feeds every few seconds. This means that RTC staff can perform analysis immediately to understand the impacts of a particular event. A screenshot of the dashboard is shown below. A live map is available on the left-hand side; an analysis of average speeds for the past 30 days is displayed in the middle; and the latest quarterly reports and a peak congestion index appear at the right. By signing in, users can perform historic analysis to determine what the impacts of a particular event or project might be, whether it is a parade, construction, or a serious crash.

Figure 5-12: NDOT Coordinated Traffic Monitoring Interface

Source: RTC FAST Dashboard 25

When an incident is detected by the ITS, FAST operators flag the location on a live map, which automatically records temporal and spatial information about the incident and provides an area for an operator to enter any additional data on the incident. Then, snapshots of the incident location as well as upstream and downstream locations are archived at 15-second intervals so that staff can have a visual reference and a timestamp for incident impacts and clearance rates.26

As an example, recent analysis of incidents on FAST revealed the impacts of large downtown conventions on the traffic patterns of Las Vegas’s major corridors. Closely examining these patterns will enable RTC and its partners at NDOT and the Metropolitan Police to better manage such large events and the traffic demands they entail. This includes the impact of police traffic direction, which helps by prioritizing access to and from event locations but also contributes to delays along the corridor and beyond.

The detailed historic analysis enabled by FAST also shows congestion event and crash trends and helps RTC identify potential interventions. By providing historic performance data, FAST has informed decisions such as whether a full weekend closure or revolving weekday closures would cause less disruption when planning a major construction project with NDOT. FAST can also pinpoint locations for safety interventions. When an expansion project on I-15 resulted in an increased number of crashes and delays, FAST pinpointed where restriping was needed to alleviate the issue. A snapshot of crash-by-corridor analysis is shown below.

Figure 5-13: NDOT Coordinated Traffic Monitoring Congestion Analysis

Source: RTC FAST Camera Snapshot Wall 27

FAST enables staff to determine where to apply smart fixes such as ramp metering, restriping, and enhanced or interactive signage, to monitor the impacts of those fixes, and to report progress directly toward RTC’s congestion reduction and safety enhancement goals.

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component 04: Performance-Based Programming

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.2.2 Program/Project Level: Regularly assess monitoring results

Using the monitoring framework, this step entails conducting performance diagnosis to determine root causes of the observed performance results (e.g., correlating traffic incidents with travel speed data; breaking down crash data by contributing factors recorded in crash records or highway inventories). Part of performance diagnosis is examining and understanding the factors that influence the effect programs and projects have on performance results. See below for a list of examples by TPM performance area (Table 5-4). If ongoing monitoring reveals that an agency is falling short of a performance target, this might indicate that the target was not realistic, that the strategies were not effective, or that one or more outside factors threw performance results off course. In this step, analyze before and after performance results in order to make a diagnosis.

Source: Federal Highway Administration

| TPM Area | Explanatory Variables |
|---|---|
| General | Socio-economic and travel trends |
| Bridge Condition | Structure type and design; Structure age; Structure maintenance history; Waterway adequacy; Traffic loading; Environment (e.g., salt spray exposure) |
| Pavement Condition | Pavement type and design; Pavement age; Pavement maintenance history; Environmental factors (e.g., freeze-thaw cycles); Traffic loading |
| Safety | Population; Traffic volume and vehicle type mix; Weather (e.g., slippery surface, poor visibility); Enforcement activities (e.g., seat belts, speeding, vehicle inspection); Roadway capacity and geometrics (e.g., curves, shoulder drop off); Safety hardware (barriers, signage, lighting, etc.); Speed limits; Availability of emergency medical facilities and services |
| Air Quality | Stationary source emissions; Weather patterns; Land use/density; Modal split; Automobile occupancy; Traffic volumes; Travel speeds; Vehicle fleet characteristics; Vehicle emissions standards; Vehicle inspection programs |
| Freight | Business climate/growth patterns; Modal options (cost, travel time, reliability); Intermodal facilities; Shipment patterns/commodity flows; Border crossings; State regulations; Global trends (e.g., containerization) |
| System Performance | Capacity; Alternative routes and modes; Traveler information; Signal operations/traffic management systems; Demand patterns; Incidents; Weather; Special events |

Below is a set of questions that can be used to start the performance diagnosis. While the specific questions will depend on the performance area being examined, the following types of questions will generally be applicable:

- What outputs have been produced as a result of the examined program or project (e.g., the miles of pavement repaved, the number of bridges rehabilitated, the number of new buses purchased)?

- What is the current level of performance?

- Is observed performance representative of “typical” conditions or is it related to unusual events or circumstances (e.g., storm events or holidays)?

- How does the current level of performance compare to past trends?

- Are things stable, improving or getting worse?

- Is the current performance part of a regularly occurring cycle?

- What factors have contributed to the current performance?

- What factors can we influence (e.g., hazardous curves, bottlenecks, pavement mix types, etc.)?

- How do changes in performance relate to general socio-economic or travel trends (e.g., economic downturn, aging population, lower fuel prices contributing to increase in driving)?

- How effective have our past actions to improve performance been (e.g., safety improvements, asset preventive maintenance programs, incident response improvement, etc.)?
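Two of the questions above (how current performance compares to past trends, and whether things are stable, improving, or getting worse) lend themselves to a simple calculation. The following is a minimal sketch under stated assumptions, not a prescribed method: the function name, the split-half comparison, and the 2 percent tolerance are all hypothetical choices made for illustration.

```python
from statistics import mean


def classify_trend(values, higher_is_better=True, tolerance=0.02):
    """Classify a performance series as 'improving', 'stable', or 'worsening'.

    Compares the mean of the more recent half of the series with the mean
    of the earlier half. `tolerance` is the relative change treated as no
    meaningful change (an assumed default of 2 percent).
    """
    if len(values) < 4:
        raise ValueError("need at least four observations to compare periods")
    midpoint = len(values) // 2
    earlier = mean(values[:midpoint])
    recent = mean(values[midpoint:])
    if earlier == 0:
        raise ValueError("earlier-period mean is zero; relative change undefined")
    change = (recent - earlier) / abs(earlier)
    if abs(change) <= tolerance:
        return "stable"
    improved = change > 0 if higher_is_better else change < 0
    return "improving" if improved else "worsening"


# Illustrative values only: percent of pavement in good condition by year.
print(classify_trend([70, 71, 75, 78]))
# Illustrative values only: annual crash counts, where lower is better.
print(classify_trend([10, 10, 14, 15], higher_is_better=False))
```

A check like this only describes the direction of change. Determining why performance changed still requires examining explanatory variables such as those in Table 5-4.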

Examples

Monitoring Winter Maintenance Practices: Rhode Island

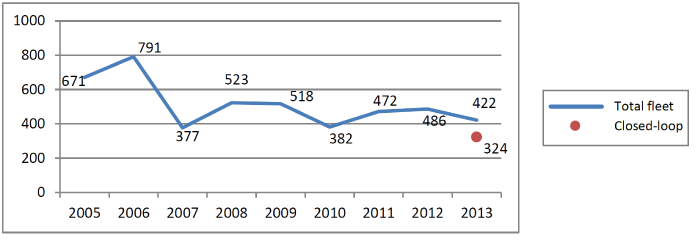

The Rhode Island Department of Transportation (RIDOT) is committed to reducing winter costs and alleviating environmental concerns related to its winter maintenance practices. In monitoring winter maintenance spending, RIDOT discovered that a key driver of increasing costs was the use of salt products to treat roadways during winter storms. RIDOT staff proposed a potential solution: installing “closed-loop” systems in state-owned snowplows. Closed-loop controllers provide more uniform salt and sand application and computerized data tracking, which reduces material usage compared with conventional spreaders. Closed-loop controllers would also enable RIDOT personnel to track material usage and application rates in specific locations.

RIDOT staff used the historical analysis of cost drivers in the winter maintenance program and the predicted savings from the closed-loop module to convince the budget office to let the agency use future savings to convert a portion of the winter vehicles to a “closed-loop” system. Once cost savings of 20 to 30 percent were observed from lower salt usage (see figure below), RIDOT staff gained approval to install the equipment on 100 percent of the fleet. Understanding a key driver of winter maintenance costs has allowed RIDOT to reduce roadway salt application by more than 27 percent over the past seven years.28

Figure 5-14: RIDOT Winter Fleet: Average Pounds of Salt Per Lane Mile

Source: RiDOT Performance Report 29

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.2.3 Program/Project Level: Use monitoring information to make adjustments

This step highlights the importance of actively using monitoring information to obtain key insights into the effectiveness of programs and projects and identify where adjustments need to be made.

Items to keep in mind as monitoring information is used to consider adjustments:

- Passage of time. Has enough time passed to gain a true picture of progress? The trajectory of progress is not always a straight-line movement; more data points may be necessary to fully understand the trend. Often, momentum can build or can be impacted by external factors over the measurement timeframe.

- Constraints. Agencies may be hindered from making program and project adjustments by TIP and RTP amendment cycles, budget development timeline, and legislative requirements (e.g., delivery of conformity model runs).

- Anomalies. Consider whether there were special circumstances driving the performance results. A single event or factor can have a sizable impact; if something atypical occurred such as a natural disaster or unexpected funding change, attempt to fully understand potential impacts to avoid making erroneous conclusions.

- Reliability of predicted performance improvements from adjustment. Before implementing any adjustments, agencies should analyze future performance. In general, predictive capabilities should allow agencies to compare the “do nothing” scenario with the potential impacts of an adjustment (see Component D: Data Usability and Analysis).

After these considerations, determine whether a course correction is necessary. A communications strategy should be in place to ensure that stakeholders are informed and up to date on monitoring results and their consequences. If there are any changes, be sure that any new measures, goals, or targets are calibrated to the preceding ones to ensure continuity and understandable documentation.
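The considerations above can be combined into a simple screening check. The sketch below is illustrative only and rests on several assumptions: the `Observation` class, the `review_adjustment` function, the minimum of four data points, and the wording of the outcomes are all hypothetical. It does not cover the constraints item (amendment cycles, budget timelines, legislative requirements), which needs agency judgment.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    period: str
    value: float
    anomaly: bool = False  # e.g., natural disaster or unexpected funding change


def review_adjustment(observations: List[Observation], target: float,
                      do_nothing_forecast: float, adjusted_forecast: float,
                      min_points: int = 4, higher_is_better: bool = True) -> str:
    """Screen whether a course correction looks warranted (hypothetical rules)."""
    # Passage of time and anomalies: require enough typical observations.
    usable = [obs for obs in observations if not obs.anomaly]
    if len(usable) < min_points:
        return "wait: not enough typical data points"

    def meets(value: float) -> bool:
        return value >= target if higher_is_better else value <= target

    # Predicted improvement: compare "do nothing" with the adjustment.
    if meets(do_nothing_forecast):
        return "hold: forecast meets target without adjustment"
    if meets(adjusted_forecast):
        return "adjust: adjustment is forecast to meet target"
    return "revisit: neither scenario is forecast to meet target"


# Illustrative values only.
history = [
    Observation("2011", 78.0),
    Observation("2012", 77.5),
    Observation("2013", 70.0, anomaly=True),  # atypical year, excluded
    Observation("2014", 76.8),
    Observation("2015", 76.1),
]
print(review_adjustment(history, target=80.0,
                        do_nothing_forecast=75.0, adjusted_forecast=81.0))
```

The forecasts are inputs rather than outputs here because the text leaves the predictive method to the agency's own analysis tools.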

Examples

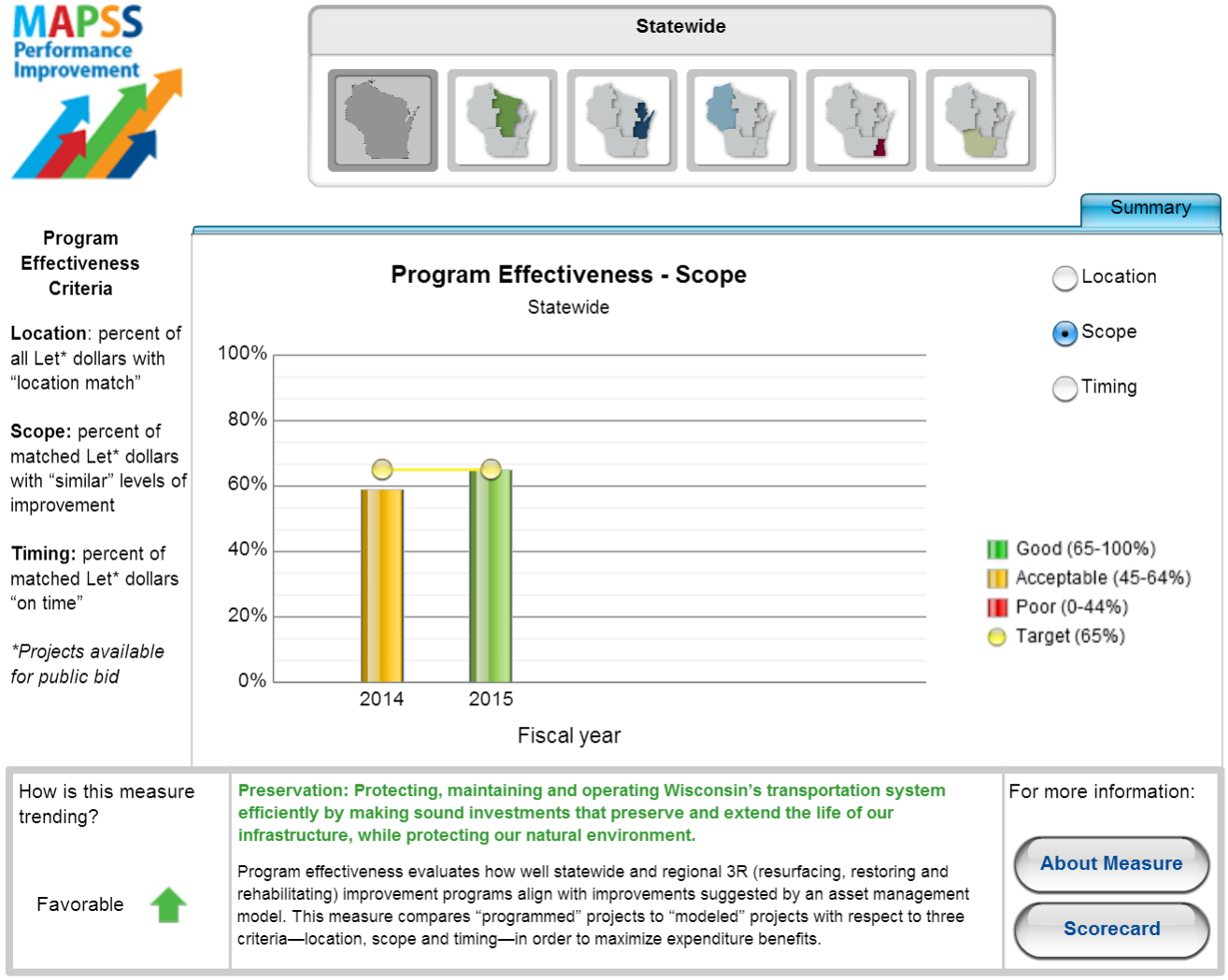

Program Effectiveness Measure: WisDOT

The Wisconsin DOT uses a measure called Program Effectiveness to assess how improvement programs align with the agency’s asset management model and performance-based plans. The measure is reported annually, and can be broken down into regions of the state and by location, scope, and timing of projects in reference to the model. Levels of performance are clearly indicated by color in the chart.30

Figure 5-15: WisDOT Regional Performance Effectiveness Scoring

Source: WisDOT 31

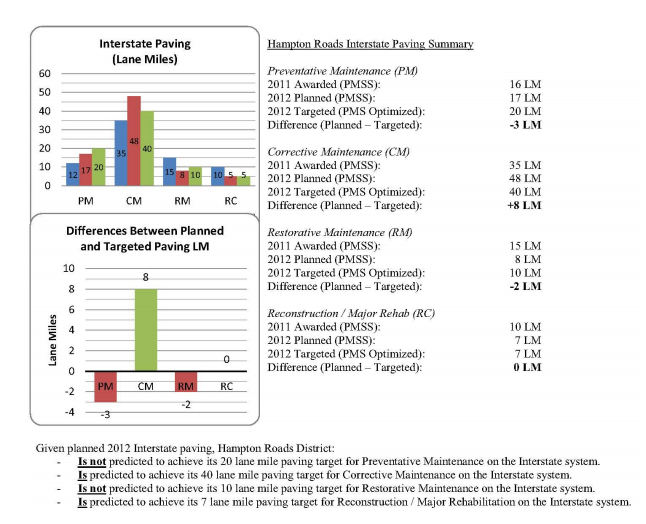

Pavement Management Adjustments: Virginia DOT

Virginia DOT (VDOT) uses a commercial Pavement Management System (PMS) with a companion pavement maintenance scheduling system tool (PMSS) to provide early warning of target non-attainment. This analysis combines the status of planned paving projects with the most recent pavement condition assessments and the pavement deterioration predicted by PMS performance models. The figure below illustrates one of the reports used to summarize planned versus targeted work by highway system class and treatment type. VDOT tracks project delivery and results at the statewide and district levels. If issues are identified, VDOT makes adjustments to get back on track with predicted network-level pavement performance.

Figure 5-16: VDOT Pavement Maintenance Scheduling System Tool (PMSS)

Source: VDOT 32
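A planned-versus-targeted comparison of the kind described above can be sketched in a few lines. This is not VDOT's PMSS logic: the function name, the 90 percent warning threshold, the category labels, and the lane-mile figures are all hypothetical and serve only to show the shape of an early-warning check.

```python
def flag_shortfalls(planned, targeted, threshold=0.9):
    """Return (category, ratio) pairs where planned work falls below
    `threshold` times the targeted work, worst shortfall first.

    `planned` and `targeted` map a (system class, treatment type) pair to
    lane miles. The 0.9 threshold is an assumed value.
    """
    flags = []
    for category, target_miles in targeted.items():
        if target_miles <= 0:
            continue  # nothing targeted, so nothing to fall short of
        ratio = planned.get(category, 0.0) / target_miles
        if ratio < threshold:
            flags.append((category, round(ratio, 2)))
    return sorted(flags, key=lambda item: item[1])


# Illustrative lane-mile figures only.
targeted = {
    ("Interstate", "Preventive"): 100.0,
    ("Primary", "Corrective"): 100.0,
    ("Secondary", "Restorative"): 50.0,
}
planned = {
    ("Interstate", "Preventive"): 95.0,
    ("Primary", "Corrective"): 60.0,
}
for category, ratio in flag_shortfalls(planned, targeted):
    print(category, ratio)
```

Sorting the worst shortfalls first reflects the early-warning purpose: staff see the categories most likely to cause target non-attainment at the top of the list.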

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component A: Organization and Culture

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.2.4 Program/Project Level: Establish an ongoing feedback loop to targets, measures, goals, and future planning and programming decisions

This step creates the critical feedback loop between performance results and future planning, programming, and target setting decisions. To create an effective feedback loop, the monitoring information gathered and adjustments made to programs and projects need to be integrated into future strategic direction development (Component 01) and the setting of performance targets (Component 02). Through an increased understanding of the effect of specific projects and programs on outcomes, the monitoring and adjustment component uncovers information to be used in future planning (Component 03) and programming (Component 04) decisions. This component also helps agency staff link their day-to-day activities to results and ultimately agency goals (Component A: Organization and Culture). The external and internal reporting and communication products (Component 06) need to be based on the information gathered during monitoring and adjustment.

Figure 5-17: Feedback Loop

Source: Federal Highway Administration

Examples

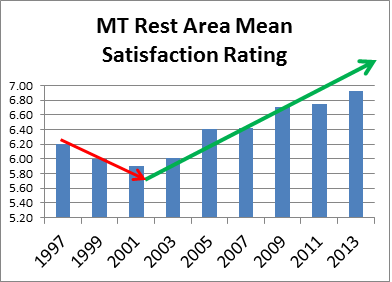

As in other states, many of the Montana Department of Transportation’s (MDT) 49 state-maintained rest area facilities are at or nearing the end of their useful life and require substantial investment to remain operational. Though these facilities are expensive to build, operate, and maintain, the travelling public expects available, safe, clean rest stops. However, when rest area needs were weighed against roadway needs, they often went unfunded, and some rest areas were closed as a result.

To address this challenge, MDT established a rest area usage monitoring effort. For every facility in the state, MDT maintenance forces installed door counters ($250) at rest area entrances, potable water (non-irrigation) meters ($250), and wastewater effluent flow meters ($750) to create a time series data set and inform sound future investments. Usage drives every design decision, and reliable data allows MDT to design and construct the right size of facility, water supply, wastewater treatment system, parking lot, number of stalls, etc. MDT also made better use of mainline traffic counts, especially from permanent counters, to improve correlations with peak rest area usage (time of year, time of day, etc.). The information gathered from these monitoring efforts, together with public complaints about rest areas, triggered a series of rest area improvements, even when those improvements competed with larger highway projects. The focused planning, investment, and research approach also created quantifiable project development and delivery efficiencies, enabling MDT to do more with less. As customer satisfaction survey results reveal,33 public perception and comments were very supportive of a rest area program grounded in monitoring and adjustment.

Figure 5-18: Rest Area Public Satisfaction 1997-2013

Source: TranPlanMT Public Involvement Surveys 1997-2013 34
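The relationship between mainline traffic counts and rest area usage mentioned in this example could be quantified with a correlation coefficient. The sketch below is a generic Pearson correlation, offered as one possible approach rather than MDT's documented method; the function name and all monthly figures are hypothetical.

```python
from math import sqrt


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length of at least two")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a series with no variation has no defined correlation")
    return covariance / (spread_x * spread_y)


# Illustrative monthly figures only: mainline average daily traffic from a
# permanent counter, and door counts at a nearby rest area.
mainline_adt = [4200, 4500, 6100, 8800, 9400, 7000]
door_counts = [310, 340, 520, 790, 860, 600]

r = pearson_correlation(mainline_adt, door_counts)
print(f"correlation between traffic and rest area usage: {r:.2f}")
```

A strong correlation at sites with both counters would support estimating usage from traffic counts, though that inference is an assumption to validate per facility.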

Linkages to Other TPM Components

- Component 01: Strategic Direction

- Component 02: Target Setting

- Component 03: Performance-Based Planning

- Component 04: Performance-Based Programming

- Component 06: Reporting and Communication

- Component A: Organization and Culture

- Component C: Data Management

- Component D: Data Usability and Analysis

Step 5.2.5 Program/Project Level: Document the process

Document the process, including progress, outputs, outcomes, and any strategic adjustments and the reasoning behind these. This includes documentation for the purposes of internal operations, ensuring that the monitoring and adjustment process is replicable in future iterations of plans and throughout multiple planning efforts. It also includes steps toward gathering and organizing data (see Component C: Data Management and Component D: Data Usability and Analysis) in order to ensure that external reporting (Component 06) can be carried out in a sustainable and impactful way.

Examples

Several examples are offered here to illustrate how program/project level monitoring and adjustment processes and any subsequent changes to goals and targets are documented.

Program Delivery Monitoring at Southwestern Pennsylvania Commission (SPC)

SPC offers extensive documentation of each program area’s monitoring and adjustment processes. As an example, within its congestion management program, SPC implements strategies under the divisions of demand management, modal options, operational improvements, and capacity improvements. SPC documents all of the performance measurements and associated monitoring calculations directly on its website.35 Gathered there are all the associated studies, reports, and other tools SPC uses to highlight, analyze, and evaluate the effectiveness of the congestion management strategies it has implemented.36 As an example within this program, HOV lanes are listed as one strategy implemented to help reach congestion goals in the SPC region. SPC documents the reasoning behind the strategy and its relationship to the agency’s congestion targets. Before and after analysis is completed using results from monitoring traffic delay, and detailed information is included on how the calculations were made and compared. This ensures that the same monitoring process can be reproduced in future cycles, allowing ongoing understanding of how investment in HOV lanes has enabled SPC to progress toward its congestion reduction target and its mobility goals.37
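A before and after analysis of traffic delay reduces to a documented, repeatable calculation. The sketch below shows one simple form, the percent change in mean delay between two periods. It is an assumption about what such a calculation might look like, not SPC's published method, and the function name and delay values are hypothetical.

```python
from statistics import mean


def before_after_change(before, after):
    """Percent change in mean delay from the before period to the after period.

    A negative result means delay went down after the strategy was implemented.
    """
    if not before or not after:
        raise ValueError("both periods need at least one observation")
    baseline = mean(before)
    if baseline == 0:
        raise ValueError("baseline delay is zero; percent change undefined")
    return (mean(after) - baseline) / baseline * 100


# Illustrative peak-period delay per vehicle, in minutes.
delay_before = [10, 12, 14]
delay_after = [9, 9, 9]
print(f"{before_after_change(delay_before, delay_after):.1f}% change in mean delay")
```

Writing the calculation down in this form is what makes it reproducible from one monitoring cycle to the next.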

Program Delivery Monitoring at Missouri DOT

In the last decade, faced with increasing costs and decreasing revenue streams, the Missouri Department of Transportation (MoDOT) revisited its pavement management program. Based on financial constraints, the agency decided to focus its efforts on improving major highways, rather than spreading resources out over minor roads as well, as had been done according to a previous formula. MoDOT established a target that would benefit the most users per dollar spent and relaxed its target for overall pavement condition that included minor roads. As a result of this adjustment, fewer resources were allocated to the preservation of minor roads, and the percentage of minor roads in good condition decreased from 71% to 60% from 2005 to 2009.38 At the same time, however, MoDOT was able to respond to customers’ desires for smoother roads by significantly improving the condition of major routes, from 47% in 2004 to 87% in 2009. Currently over 89% of major highways are in good condition, but MoDOT again must recognize that this condition level will be difficult to maintain without additional resources.39 MoDOT used its Tracker performance measurement tool to document this adjustment to its performance targets and measures and to monitor and report the results, which are released quarterly.

Documenting the decision to focus more resources on major routes rather than on the system overall was key to MoDOT’s ability to measure progress moving forward and to ensure stakeholders understood the adjustment. MoDOT measures its progress not only with typical performance measures but also through regular customer satisfaction surveys and focus groups to determine whether improvement projects are making the anticipated progress toward a satisfactory user experience. Communicating this strategy back to users using monitoring data was therefore critical.40 This documentation shows how the programs and projects implemented as MoDOT’s pavement strategies are intended to impact progress toward performance targets.